Prototypical Networks (ProtoNets)

Tags: #machine learning #meta learning

Equation

$$c_{k}=\frac{1}{|S_{k}|}\sum_{(x_{i},y_{i}) \in S_{k}} f_{\phi}(x_{i}) \\ p_{\phi}(y=k|x)=\frac{\exp(-d(f_{\phi}(x), c_{k}))}{\sum_{k^{'}} \exp(-d(f_{\phi}(x), c_{k^{'}}))} \\ \min J(\phi)=-\log p_{\phi}(y=k|x)$$

Latex Code

c_{k}=\frac{1}{|S_{k}|}\sum_{(x_{i},y_{i}) \in S_{k}} f_{\phi}(x_{i}) \\ p_{\phi}(y=k|x)=\frac{\exp(-d(f_{\phi}(x), c_{k}))}{\sum_{k^{'}} \exp(-d(f_{\phi}(x), c_{k^{'}}))} \\ \min J(\phi)=-\log p_{\phi}(y=k|x)

Introduction

Explanation

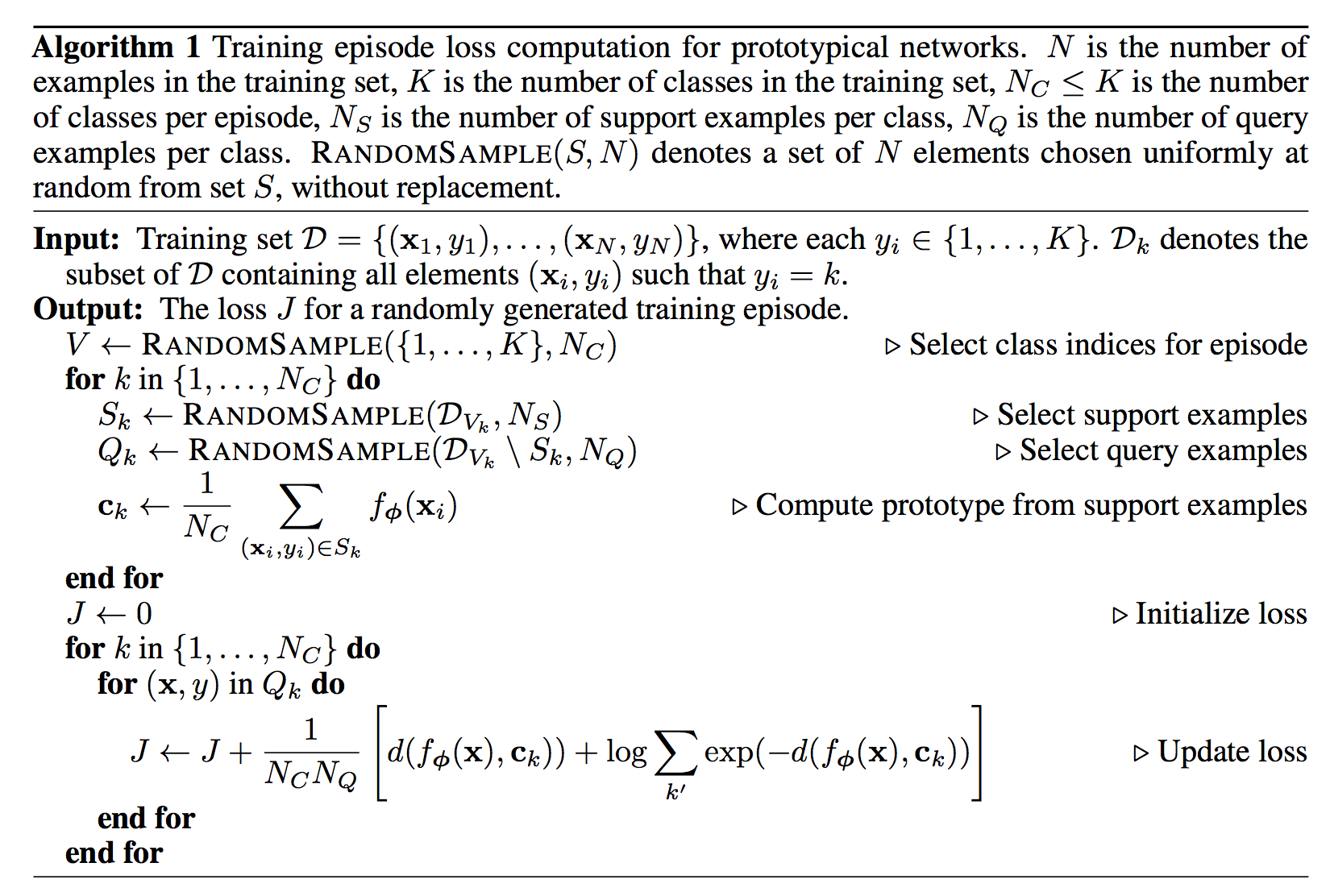

Prototypical networks compute an M-dimensional representation c_{k}, or prototype, of each class through an embedding function f_{\phi}(.) with learnable parameters \phi. Each prototype is the mean vector of the embedded support points belonging to its class k, where S_{k} is the set of support examples labeled with class k. For a query point x, the network produces a distribution over classes p_{\phi}(y=k|x) by taking a softmax over the negative distances d from f_{\phi}(x) to each prototype in the embedding space. Training minimizes the negative log-likelihood J(\phi)=-\log p_{\phi}(y=k|x) of the true class k over the query set.
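The three formulas above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: the embeddings f_{\phi}(x) are taken as already computed, d is assumed to be squared Euclidean distance, the loss is averaged over the query points, and the function names (`prototypes`, `class_log_probs`, `episode_loss`) and toy data are hypothetical.

```python
import numpy as np

def prototypes(support_emb, support_labels, num_classes):
    """c_k: mean of the embedded support points of each class k."""
    return np.stack([support_emb[support_labels == k].mean(axis=0)
                     for k in range(num_classes)])

def class_log_probs(query_emb, protos):
    """log p(y=k|x): log-softmax over negative squared Euclidean distances.

    Assumption: d is squared Euclidean distance.
    """
    # Pairwise differences via broadcasting, shape (num_queries, num_classes, M)
    diff = query_emb[:, None, :] - protos[None, :, :]
    logits = -np.sum(diff ** 2, axis=-1)
    # Subtract the row maximum for numerical stability before exponentiating
    logits = logits - logits.max(axis=1, keepdims=True)
    return logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

def episode_loss(query_emb, query_labels, protos):
    """J: negative log-probability of the true class, averaged over queries."""
    log_p = class_log_probs(query_emb, protos)
    return -log_p[np.arange(len(query_labels)), query_labels].mean()

# Toy 2-way episode with 2-dimensional embeddings (made-up numbers)
support_emb = np.array([[0.0, 0.0], [0.0, 2.0], [4.0, 4.0], [6.0, 4.0]])
support_labels = np.array([0, 0, 1, 1])
query_emb = np.array([[0.0, 1.0], [5.0, 4.0]])
query_labels = np.array([0, 1])

protos = prototypes(support_emb, support_labels, num_classes=2)
log_p = class_log_probs(query_emb, protos)
loss = episode_loss(query_emb, query_labels, protos)
```

In a real model the embeddings would come from a trainable network and the loss would be backpropagated through f_{\phi}; the sketch only covers the forward computation.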

Related Documents

- See the paper "Prototypical Networks for Few-shot Learning" for more detail.

Prototypical Networks as Mixture Density Estimation

Bregman divergences

d_{\phi}(z,z^{'})=\phi(z) - \phi(z^{'})-(z-z^{'})^{T} \nabla \phi(z^{'})

Mixture Density Estimation

p_{\phi}(y=k|z)=\frac{\pi_{k} \exp(-d(z, \mu (\theta_{k})))}{\sum_{k^{'}} \pi_{k^{'}} \exp(-d(z, \mu (\theta_{k^{'}})))}

Explanation

When the distance function is a regular Bregman divergence, the prototypical networks algorithm is equivalent to performing mixture density estimation on the support set with an exponential family density. A regular Bregman divergence d_{\phi} is defined as above, where \phi is a differentiable, strictly convex function of the Legendre type. Note that \phi here denotes this convex generating function, not the embedding parameters. Examples of Bregman divergences include squared Euclidean distance and Mahalanobis distance.
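As a quick numerical check of the definition above, the following sketch evaluates the Bregman divergence for two generating functions. It assumes \phi(z)=z^{T}z for squared Euclidean distance and \phi(z)=z^{T}Az with a symmetric positive-definite matrix A for the (squared) Mahalanobis form; the helper name `bregman`, the matrix `A`, and the test vectors are hypothetical choices for illustration.

```python
import numpy as np

def bregman(phi, grad_phi, z, z_prime):
    """d_phi(z, z') = phi(z) - phi(z') - (z - z')^T grad phi(z')."""
    return phi(z) - phi(z_prime) - (z - z_prime) @ grad_phi(z_prime)

z = np.array([1.0, 2.0, 3.0])
z_prime = np.array([0.5, -1.0, 2.0])

# Assumption: phi(z) = z^T z, whose gradient is 2z
d_euclid = bregman(lambda v: v @ v, lambda v: 2.0 * v, z, z_prime)

# Assumption: phi(z) = z^T A z with symmetric positive-definite A,
# whose gradient is 2 A z
A = np.diag([2.0, 1.0, 0.5])
d_mahal = bregman(lambda v: v @ A @ v, lambda v: 2.0 * A @ v, z, z_prime)

# Closed forms the two divergences should reduce to
sq_euclid = np.sum((z - z_prime) ** 2)
sq_mahal = (z - z_prime) @ A @ (z - z_prime)
```

Both `d_euclid` and `d_mahal` should match their closed forms `sq_euclid` and `sq_mahal` up to floating-point error, which is the sense in which these distances are Bregman divergences.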
