# XR Blocks

[npm](https://www.npmjs.com/package/xrblocks)

[bundle size](https://bundlephobia.com/result?p=xrblocks)

#### JavaScript library for rapid XR and AI prototyping

[Site](https://xrblocks.github.io/) —

[Manual](https://xrblocks.github.io/docs/) —

[Templates](https://xrblocks.github.io/docs/templates/Basic/) —

[Demos](https://xrblocks.github.io/docs/samples/ModelViewer/) —

[YouTube](https://www.youtube.com/watch?v=75QJHTsAoB8) —

[arXiv](https://arxiv.org/abs/2509.25504) —

[Blog](https://research.google/blog/xr-blocks-accelerating-ai-xr-innovation/)

### Description

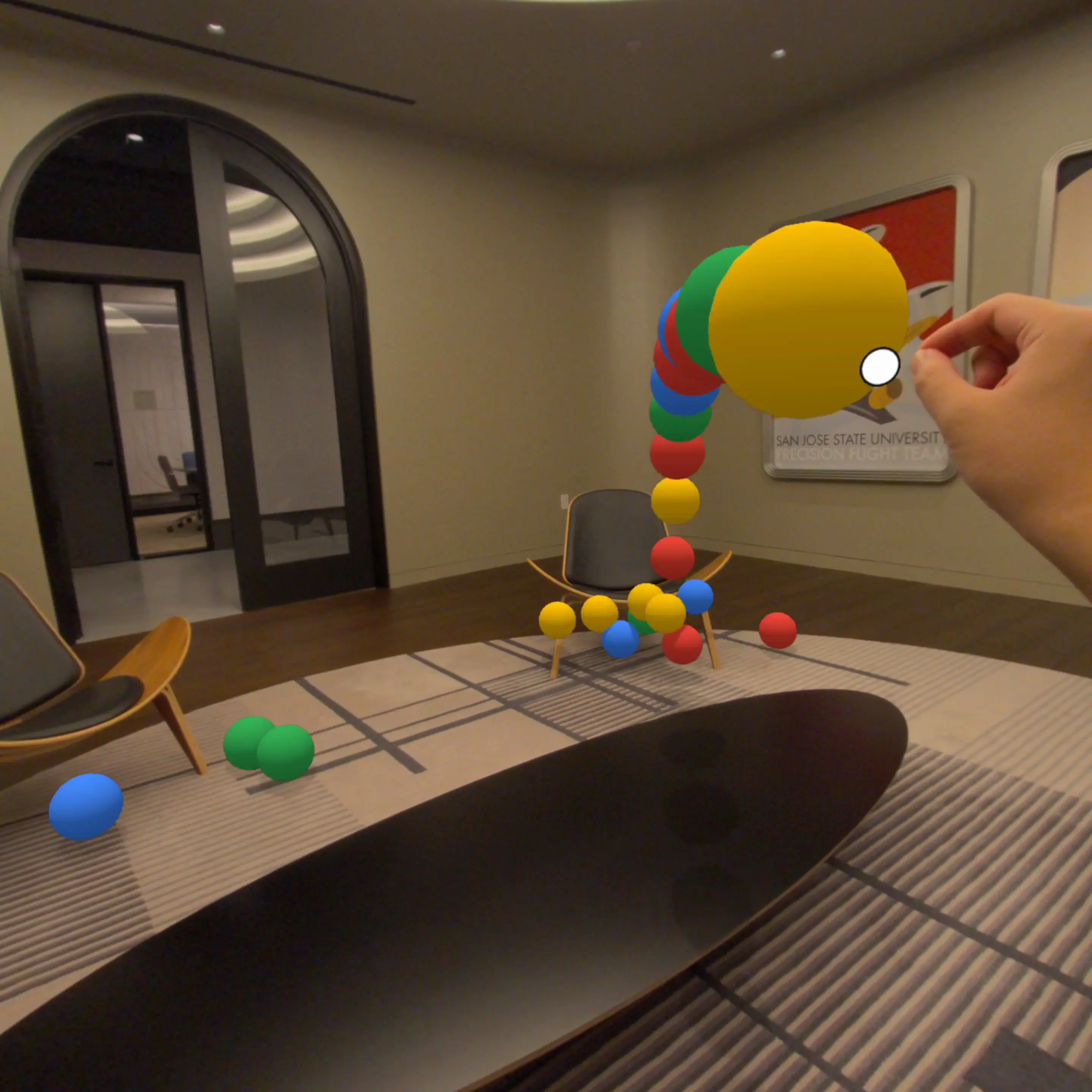

**XR Blocks** is a lightweight, cross-platform library for rapidly prototyping
advanced XR and AI experiences. Built upon [three.js](https://threejs.org), it
targets Chrome v136+ with WebXR support on Android XR (e.g.,
[Galaxy XR](https://www.samsung.com/us/xr/galaxy-xr/galaxy-xr/)) and also
includes a powerful desktop simulator for development. It provides a
user-centric, developer-friendly SDK that simplifies the creation of immersive
applications, with features such as:

- **Hand Tracking & Gestures:** Access advanced hand tracking, custom
  gestures with TensorFlow Lite / PyTorch models, and interaction events.
- **Gesture Recognition:** Opt into pinch, open-palm, fist, thumbs-up, point,
  and spread detection with `options.enableGestures()`, tune providers or
  thresholds, and subscribe to `gesturestart`/`gestureupdate`/`gestureend`
  events from the shared subsystem.
- **World Understanding:** Explore samples featuring depth sensing,
  geometry-aware physics, and object recognition with Gemini, in both XR and
  the desktop simulator.
- **AI Integration:** Seamlessly connect to Gemini for multimodal
  understanding and live conversational experiences.
- **Cross-Platform:** Write once and deploy to both XR devices and desktop
  Chrome browsers.
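The gesture bullets above describe detection with tunable thresholds and a
start/update/end event lifecycle. The sketch below illustrates that general
pattern for a pinch only: it measures the distance between the WebXR
`thumb-tip` and `index-finger-tip` joints and applies hysteresis so the gesture
does not flicker near the threshold. It is not the XR Blocks implementation;
the `PinchTracker` class, its `on`/`update` methods, the event payloads, and the
2 cm / 3 cm default thresholds are all hypothetical.

```javascript
// A minimal sketch of a thresholded gesture lifecycle, NOT the XR Blocks API.
// PinchTracker, its thresholds, and the event payloads are hypothetical.

/** Euclidean distance between two {x, y, z} points (meters). */
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

class PinchTracker {
  /**
   * @param {number} startThreshold Pinch starts when the tips are closer than this (m).
   * @param {number} endThreshold Pinch ends when the tips are farther than this (m).
   *     Keeping endThreshold > startThreshold (hysteresis) avoids flicker.
   */
  constructor(startThreshold = 0.02, endThreshold = 0.03) {
    if (endThreshold <= startThreshold) {
      throw new Error('endThreshold must exceed startThreshold');
    }
    this.startThreshold = startThreshold;
    this.endThreshold = endThreshold;
    this.active = false;
    this.listeners = {gesturestart: [], gestureupdate: [], gestureend: []};
  }

  /** Registers a callback for 'gesturestart', 'gestureupdate', or 'gestureend'. */
  on(type, callback) {
    this.listeners[type].push(callback);
  }

  /** Calls every listener registered for `type` with a small event object. */
  emit(type, detail) {
    for (const callback of this.listeners[type]) callback({type, ...detail});
  }

  /**
   * Feeds one frame of joint positions, keyed by the WebXR joint names
   * 'thumb-tip' and 'index-finger-tip'.
   */
  update(joints) {
    const d = distance(joints['thumb-tip'], joints['index-finger-tip']);
    if (!this.active && d < this.startThreshold) {
      this.active = true;
      this.emit('gesturestart', {name: 'pinch', distance: d});
    } else if (this.active && d > this.endThreshold) {
      this.active = false;
      this.emit('gestureend', {name: 'pinch', distance: d});
    } else if (this.active) {
      // Still pinching: either below the start threshold or inside the hysteresis band.
      this.emit('gestureupdate', {name: 'pinch', distance: d});
    }
  }
}
```

Feeding frames where the fingertip gap goes 5 cm, 1 cm, 2.5 cm, then 5 cm
produces `gesturestart`, `gestureupdate`, and `gestureend`; the 2.5 cm frame
still counts as a pinch because it has not crossed the 3 cm release threshold.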

We welcome all contributors to help foster an AI + XR community! Read our
[blog post](https://research.google/blog/xr-blocks-accelerating-ai-xr-innovation/)
and [white paper](https://arxiv.org/abs/2509.25504) for the project's vision and
roadmap.

### Usage

XR Blocks can be imported directly into a webpage using an importmap. The code
below creates a basic XR scene containing a cylinder. When you view the scene,
you can pinch your fingers (in XR) or click (in the desktop simulator) to change
the cylinder's color. You can also try it in
[this live demo](https://xrblocks.github.io/docs/templates/Basic/).

```html
<title>Basic Example | XR Blocks</title>
```

### Development guide

Follow the steps below to clone the repository and build XR Blocks:

```bash
# Clone the repository.
git clone --depth=1 git@github.com:google/xrblocks.git
cd xrblocks

# Install dependencies.
npm ci

# Build xrblocks.js.
npm run build

# After making changes, check ESLint and run Prettier.
npm run lint # ESLint check
npm run format # Prettier format
```

XR Blocks uses ESLint for linting and Prettier for formatting.

If you code in VS Code, install the [ESLint extension](https://marketplace.visualstudio.com/items?itemName=dbaeumer.vscode-eslint) and the [Prettier extension](https://marketplace.visualstudio.com/items?itemName=esbenp.prettier-vscode), then set Prettier as your default formatter.

This is not an officially supported Google product, but it will be actively
maintained by the XR Labs team and external collaborators. This project is not
eligible for the
[Google Open Source Software Vulnerability Rewards Program](https://bughunters.google.com/open-source-security).

### User Data & Permissions

When using specific features in this SDK (e.g., WebXR, hand tracking, camera),
users will be prompted for permissions, and the application may not function as
expected if a permission is denied.

XR Blocks is an open source software development kit that does not handle data
by itself; however, the use of other APIs may collect user data and require user
permissions:

- When using [WebXR](https://immersive-web.github.io/) and
  [LiteRT](https://ai.google.dev/edge/litert) APIs (e.g., depth sensing, gesture
  recognition), all data is stored and processed locally with on-device models.
- When using AI features (e.g.,
  [Gemini Live](https://gemini.google/overview/gemini-live), Gemini Flash), data
  is sent to Gemini servers; please follow
  [Gemini's Privacy & Terms](https://ai.google.dev/gemini-api/terms).

### Keep Your API Key Secure

This SDK does not require an API key for non-AI samples. For specific AI use
cases, the SDK provides an interface for using cloud-hosted Gemini services in
XR experiences, which requires an API key from
[AI Studio](https://aistudio.google.com/app/apikey). Please follow
[this doc](https://ai.google.dev/gemini-api/docs/api-key#security) for best
practices on keeping your API key secure.

Treat your Gemini API key like a password. If it is compromised, others can use
your project's quota, incur charges (if billing is enabled), and access your
private data, such as files.

#### Critical Security Rules

Never commit API keys to source control. Do not check your API key into version
control systems like Git.
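One way to back up the rule above is a pre-commit check that rejects files
containing something shaped like a key. The sketch below assumes the common
heuristic that Google API keys are 39 characters long and start with `AIza`;
the `findLikelyApiKeys` and `maskKey` helpers are hypothetical and will miss
keys in any other format, so treat this as a safety net, not a guarantee.

```javascript
// Hypothetical pre-commit helper: flags strings shaped like Google API keys.
// Heuristic assumption: 'AIza' followed by 35 characters from [0-9A-Za-z_-].
const GOOGLE_API_KEY_PATTERN = /AIza[0-9A-Za-z_-]{35}/g;

/**
 * Returns every substring of `text` that looks like a Google API key.
 * @param {string} text File contents to scan.
 * @returns {string[]} Matches in order of appearance (empty if none).
 */
function findLikelyApiKeys(text) {
  return text.match(GOOGLE_API_KEY_PATTERN) ?? [];
}

/** Masks all but the first 6 characters so a report does not re-leak the key. */
function maskKey(key) {
  return key.slice(0, 6) + '*'.repeat(Math.max(0, key.length - 6));
}
```

A hook script could read each staged file, call `findLikelyApiKeys` on its
contents, print the masked matches, and exit with a non-zero status to block
the commit.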

Never expose API keys on the client side. Do not use your API key directly in
web or mobile apps in production. Keys in client-side code (including our
JavaScript/TypeScript libraries and REST calls) can be extracted.
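To keep the key off the client, browser code can call your own backend, which
attaches the key before forwarding the request to Gemini. The sketch below only
builds that upstream request on the server. It assumes the Gemini REST
`generateContent` endpoint with the `x-goog-api-key` header, a server
environment variable named `GEMINI_API_KEY`, and a default model name of
`gemini-2.5-flash`; check all of these against the Gemini API documentation.
`buildGeminiRequest` is a hypothetical helper and is not part of XR Blocks.

```javascript
// Server-side sketch (e.g. Node.js): the API key never reaches the browser.
// Assumptions: the Gemini REST endpoint shape, the x-goog-api-key header,
// the GEMINI_API_KEY variable name, and the default model name.
const GEMINI_BASE_URL = 'https://generativelanguage.googleapis.com/v1beta';

/**
 * Builds the upstream request for a text prompt received from the client.
 * @param {string} prompt Text sent by the browser (no key included).
 * @param {Record<string, string | undefined>} env Usually process.env.
 * @param {string} model Hypothetical default model name.
 * @returns {{url: string, init: object}} Arguments for fetch(url, init).
 */
function buildGeminiRequest(prompt, env, model = 'gemini-2.5-flash') {
  const apiKey = env.GEMINI_API_KEY;
  if (!apiKey) {
    throw new Error('GEMINI_API_KEY is not set on the server');
  }
  if (typeof prompt !== 'string' || prompt.length === 0) {
    throw new Error('prompt must be a non-empty string');
  }
  return {
    url: `${GEMINI_BASE_URL}/models/${encodeURIComponent(model)}:generateContent`,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        // Read from the server environment, never from client input.
        'x-goog-api-key': apiKey,
      },
      body: JSON.stringify({contents: [{parts: [{text: prompt}]}]}),
    },
  };
}
```

A backend route would then call `fetch(url, init)` (available globally in
Node.js 18 and later) and return only the model's response to the browser.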

### Uninstallation

To remove XR Blocks from your code, simply remove the lines from your `