OpenAI o1 Reviews for Code Reasoning Ability


Prompts

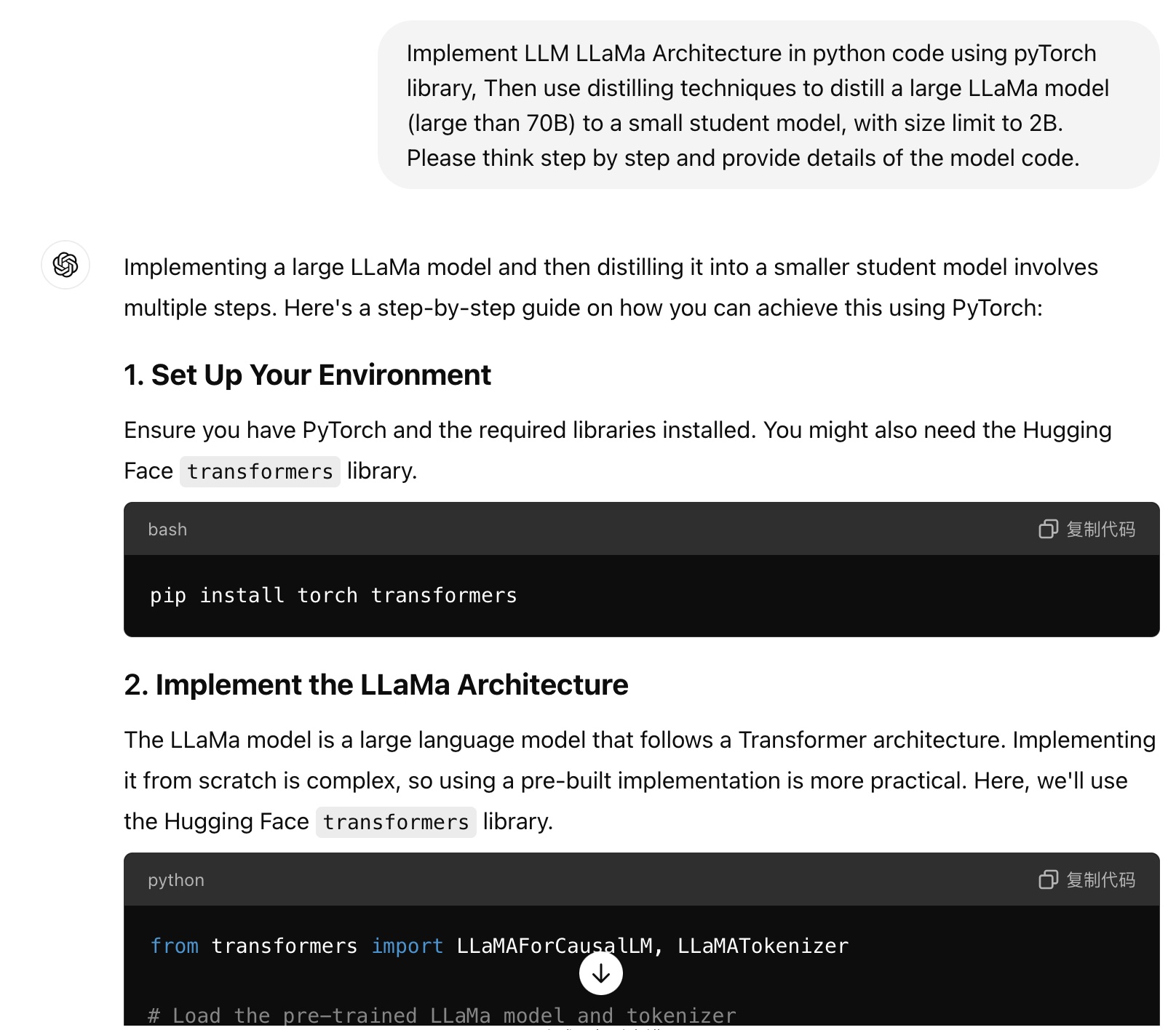

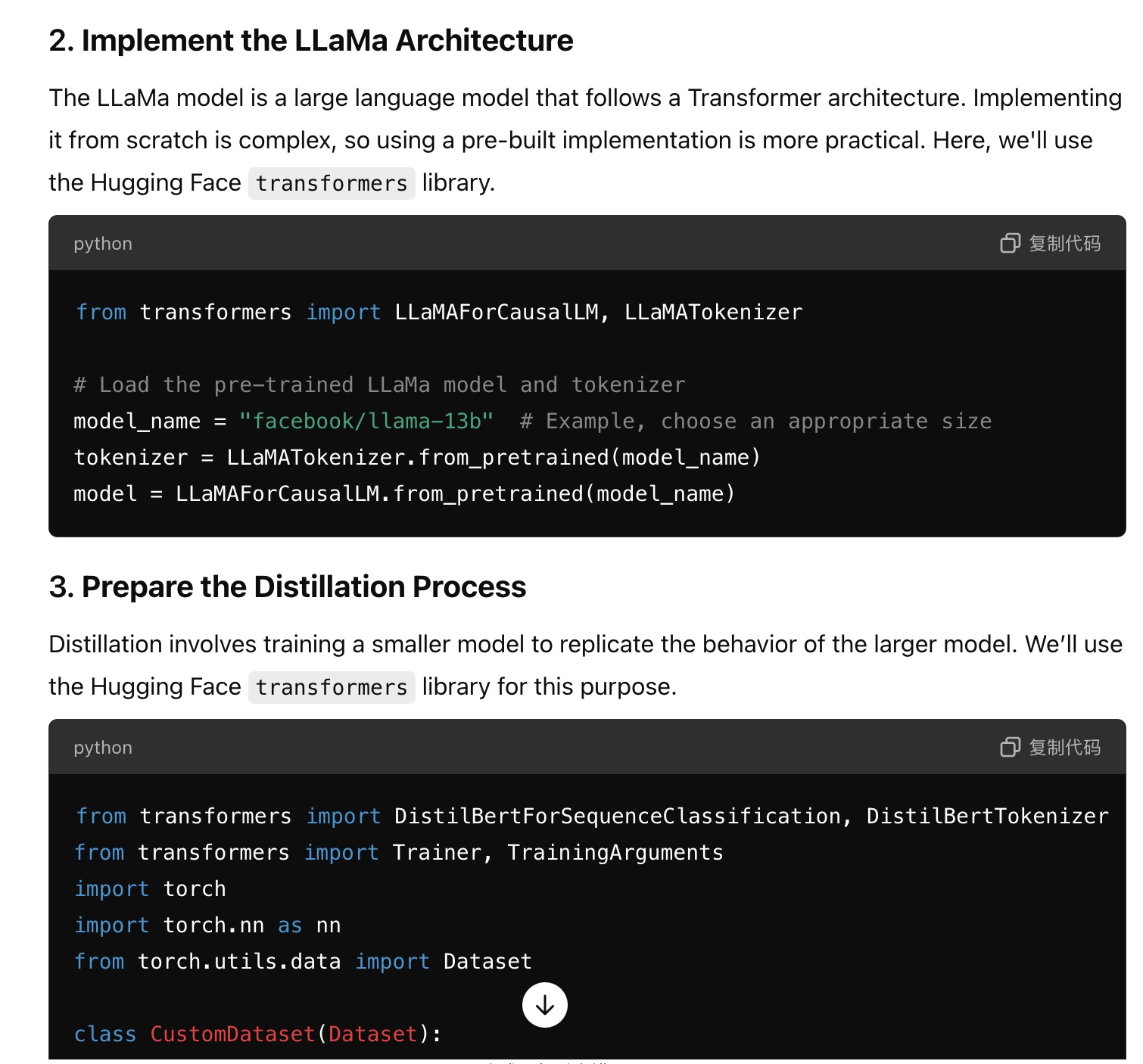

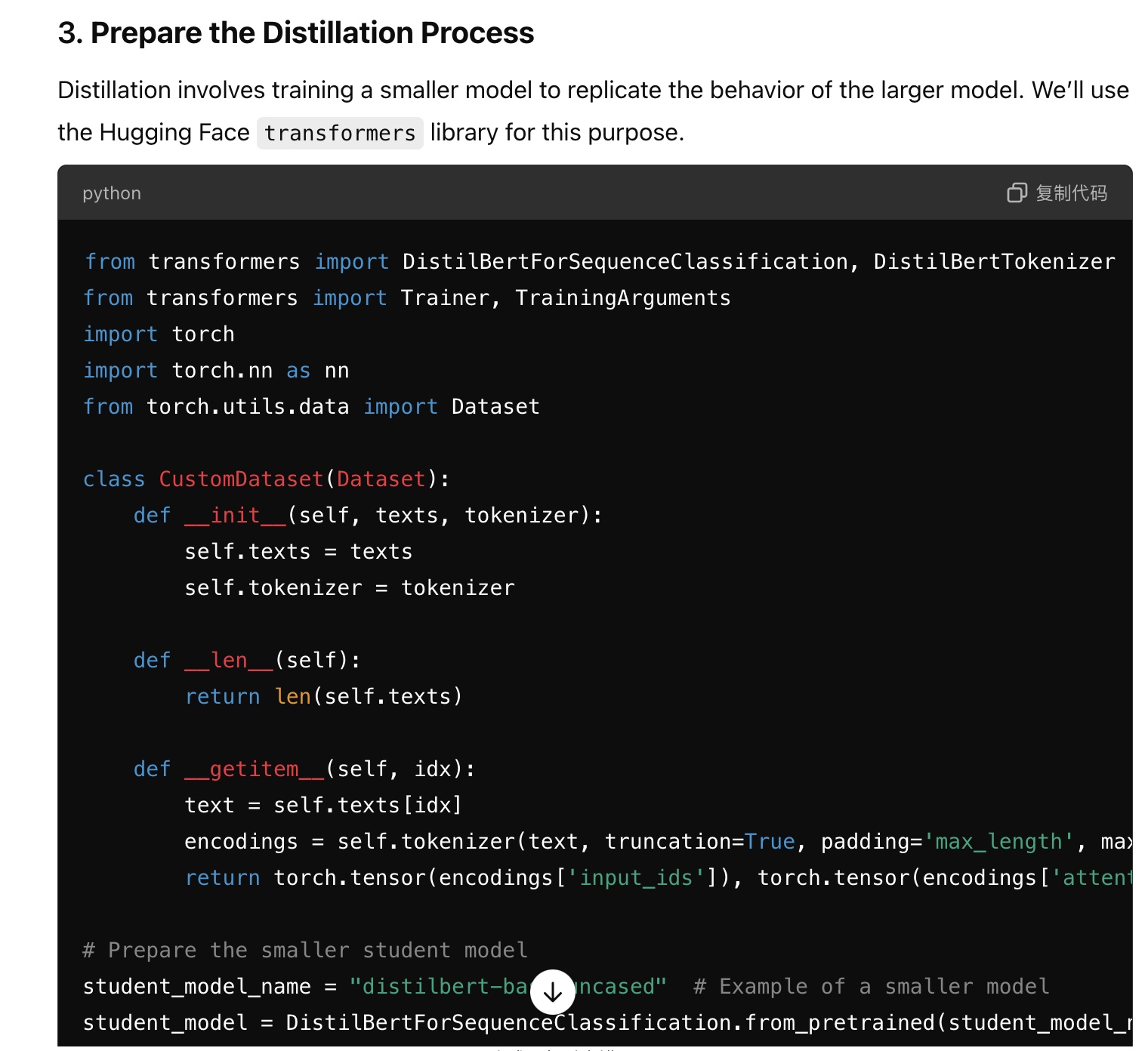

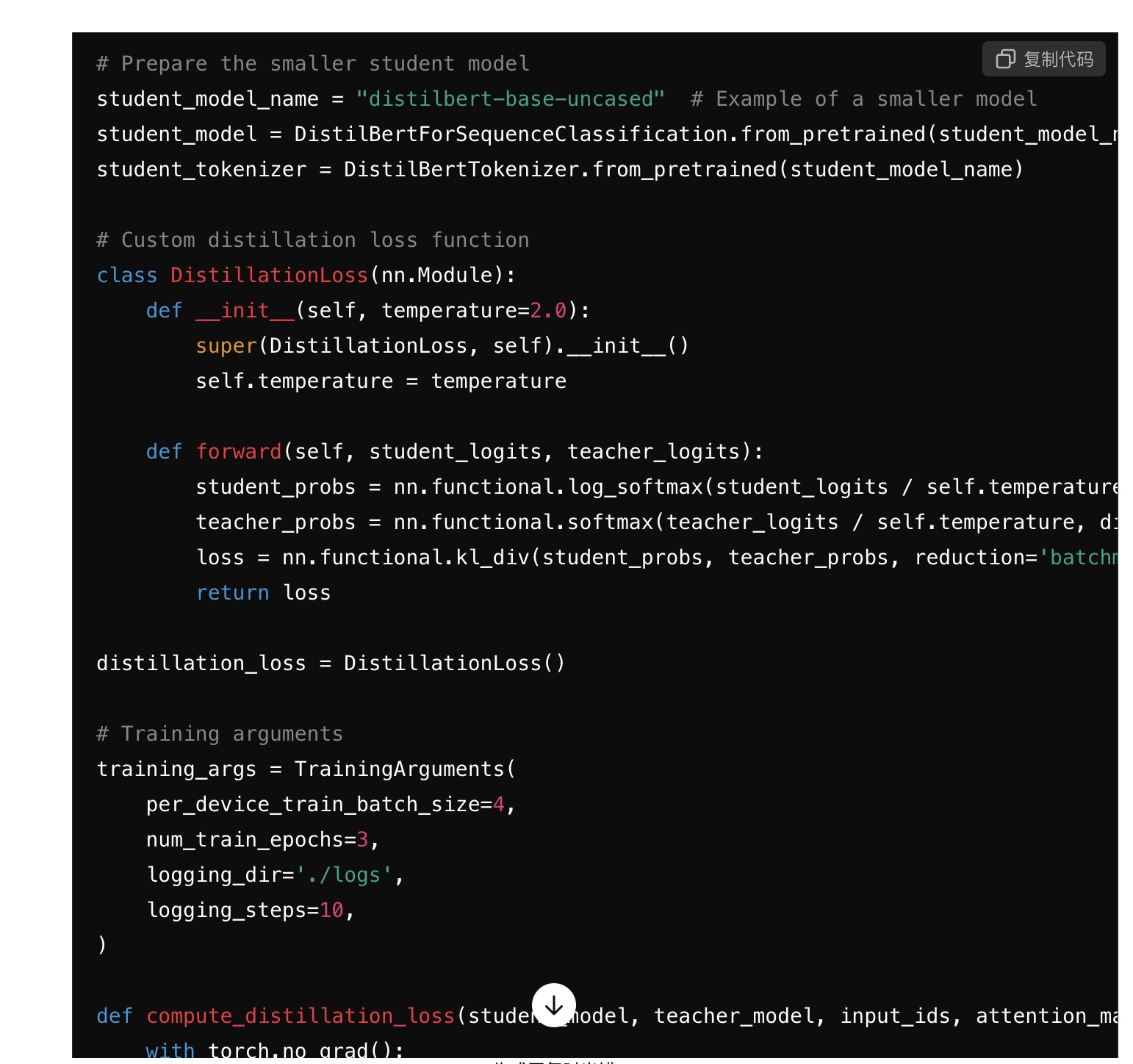

Implement LLM LLaMa Architecture in python code using pyTorch library, Then use distilling techniques to distill a large LLaMa model (large than 70B) to a small student model, with size limit to 2B. Please think step by step and provide details of the model code.

Write front end code of the login and logout pages for H5 mobile application usage. Split the code in separate files for css, html, and js.

Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format.

Reviews


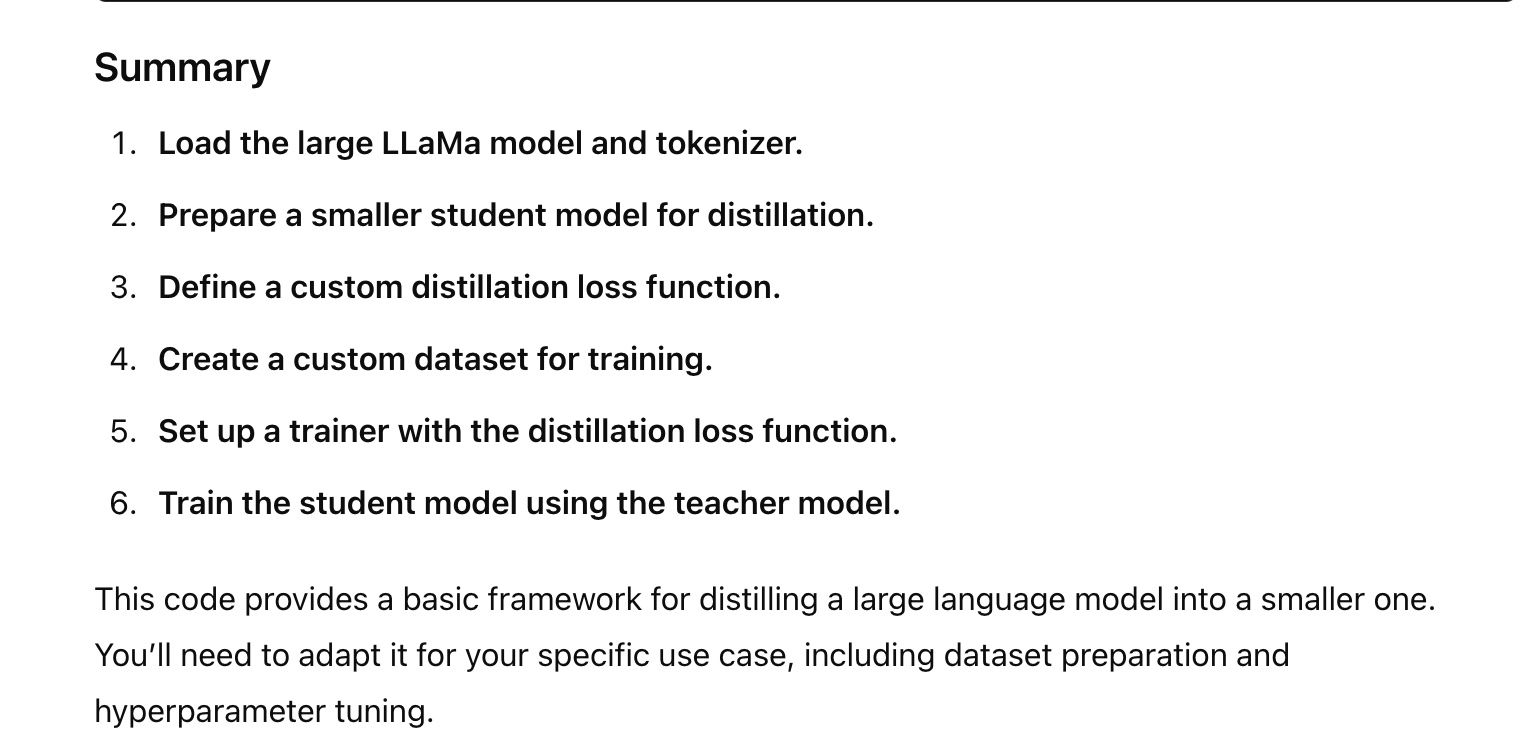

Prompt: Implement LLM LLaMa Architecture in python code using pyTorch library, Then use distilling techniques to distill a large LLaMa model (large than 70B) to a small student model, with size limit to 2B. Please think step by step and provide details of the model code.

I asked the OpenAI o1 model to implement the LLaMA architecture in Python with PyTorch, including a distillation function. The overall response is excellent. It breaks the task down into three steps:

1. Set Up Your Environment
2. Implement the LLaMa Architecture
3. Prepare the Distillation Process

The code itself consists of several sections:

- Load the large LLaMa model and tokenizer.
- Prepare a smaller student model for distillation.
- Define a custom distillation loss function.
- Create a custom dataset for training.
- Set up a trainer with the distillation loss function.
- Train the student model using the teacher model.

I examined the distillation loss code, which computes the KL divergence between the student and teacher outputs, and the approach is correct. One caveat: PyTorch's kl_div expects its first argument as log-probabilities and its second as probabilities, so the call below is only correct if student_probs comes from a log-softmax.

""" loss = nn.functional.kl_div(student_probs, teacher_probs, reduction='batchmean') """
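To make the loss above concrete, here is a minimal pure-Python sketch of what a KL-divergence distillation loss with `batchmean` reduction computes. It is not the code o1 generated: the function names, the `temperature` parameter, and the temperature-squared scaling are assumptions added for illustration, following a common distillation convention.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax over one row of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_norm for x in scaled]


def distillation_kl(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student), summed per row and averaged over the batch.

    Hypothetical helper: mirrors kl_div(log_student, teacher,
    reduction='batchmean') on temperature-softened distributions.
    """
    total = 0.0
    for s_row, t_row in zip(student_logits, teacher_logits):
        s_log = log_softmax(s_row, temperature)  # student in log space
        t_log = log_softmax(t_row, temperature)  # teacher in log space
        # Sum over classes of p_teacher * (log p_teacher - log p_student).
        total += sum(math.exp(t) * (t - s) for s, t in zip(s_log, t_log))
    # Scaling by T^2 is an assumed convention, not taken from the review.
    return (temperature ** 2) * total / len(student_logits)


if __name__ == "__main__":
    student = [[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]]
    teacher = [[2.0, 1.0, 0.0], [1.0, 2.0, 3.0]]
    print(distillation_kl(student, teacher))
```

The loss is zero when student and teacher logits match and grows as the two distributions diverge, which is the behaviour a distillation trainer relies on.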


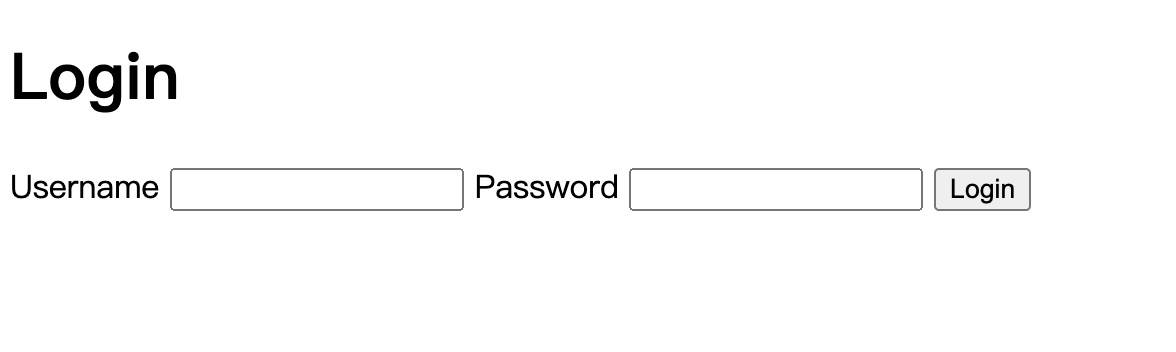

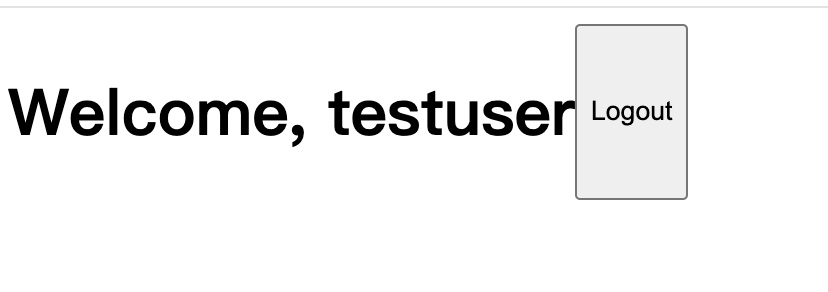

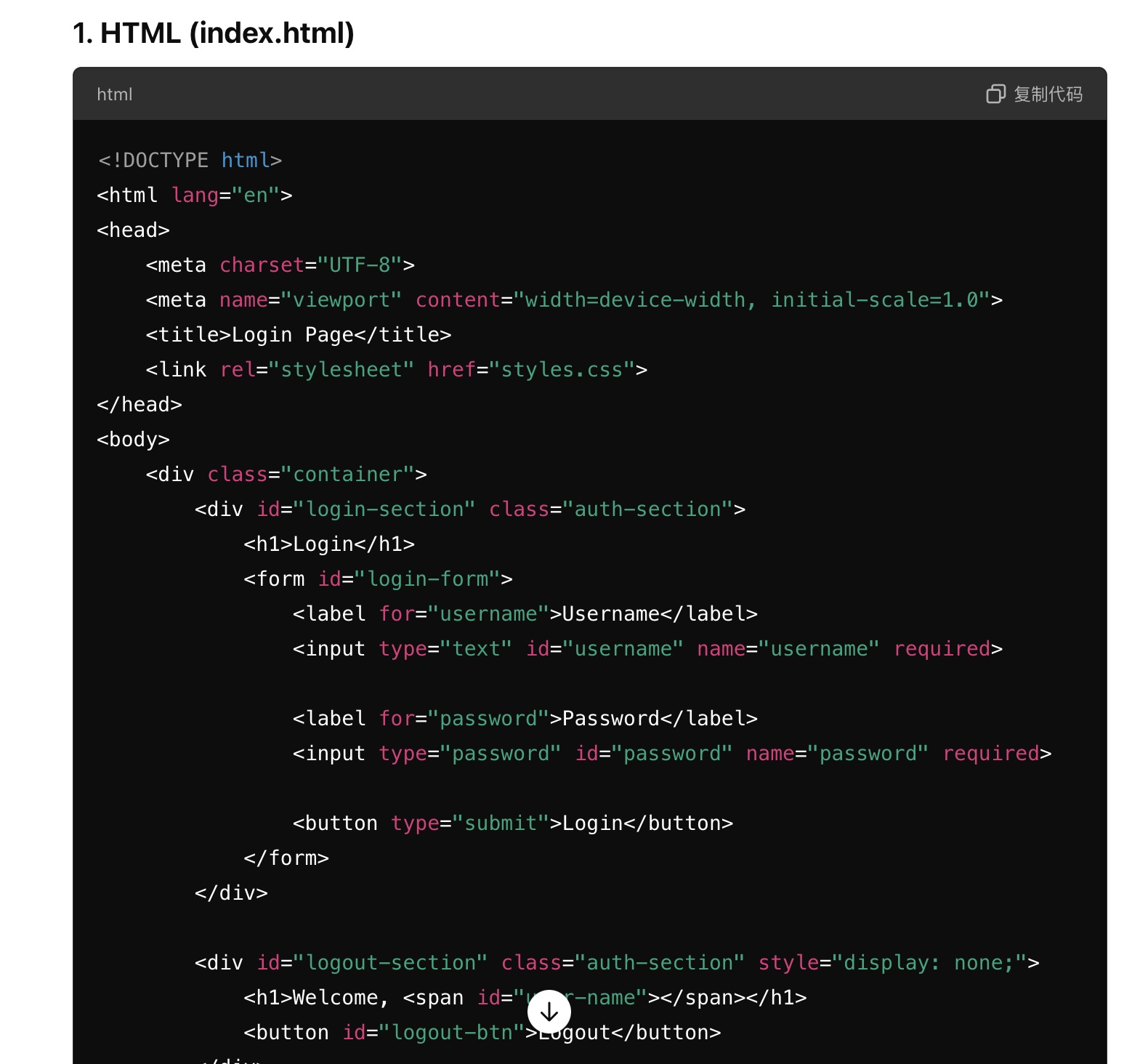

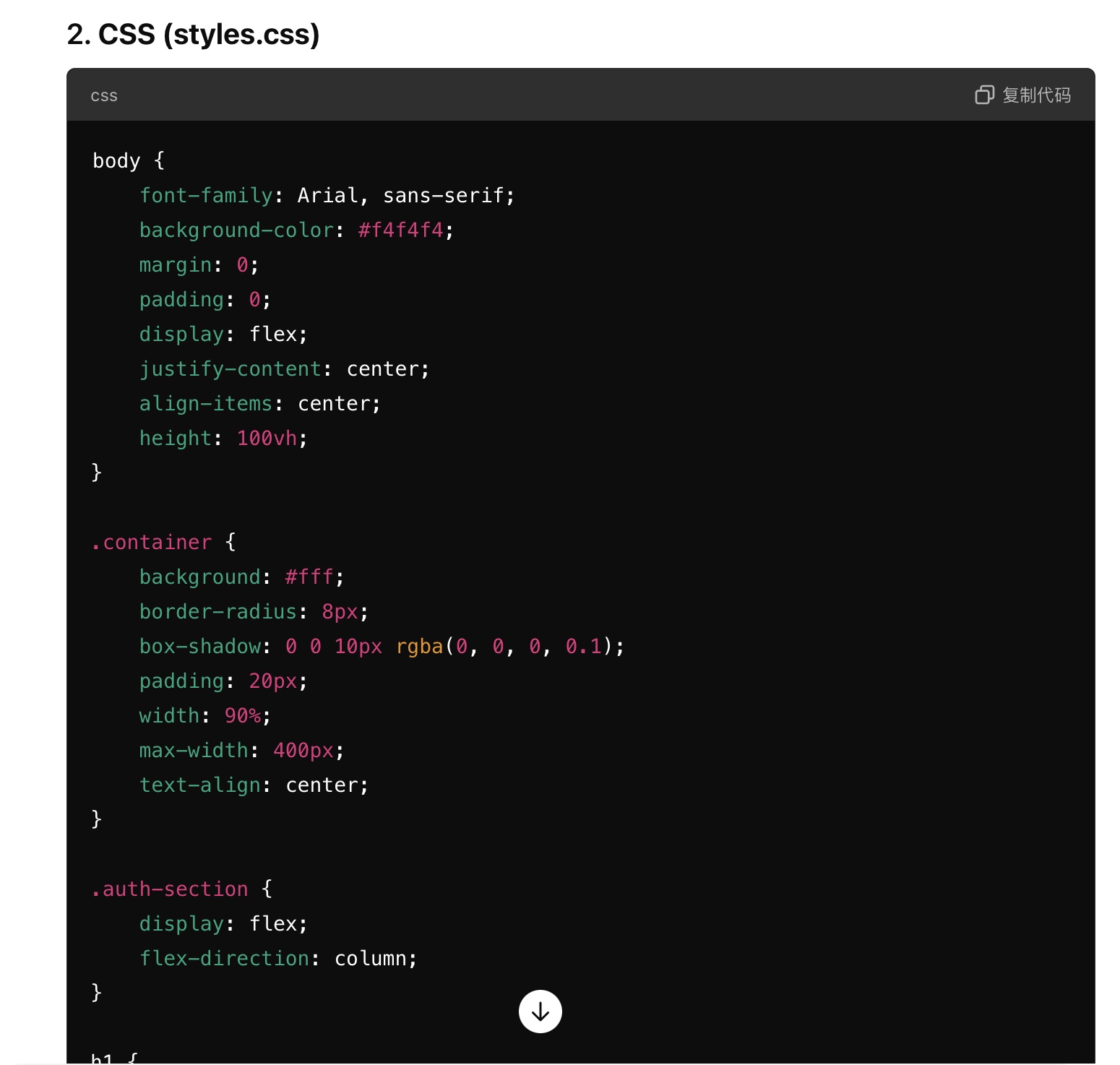

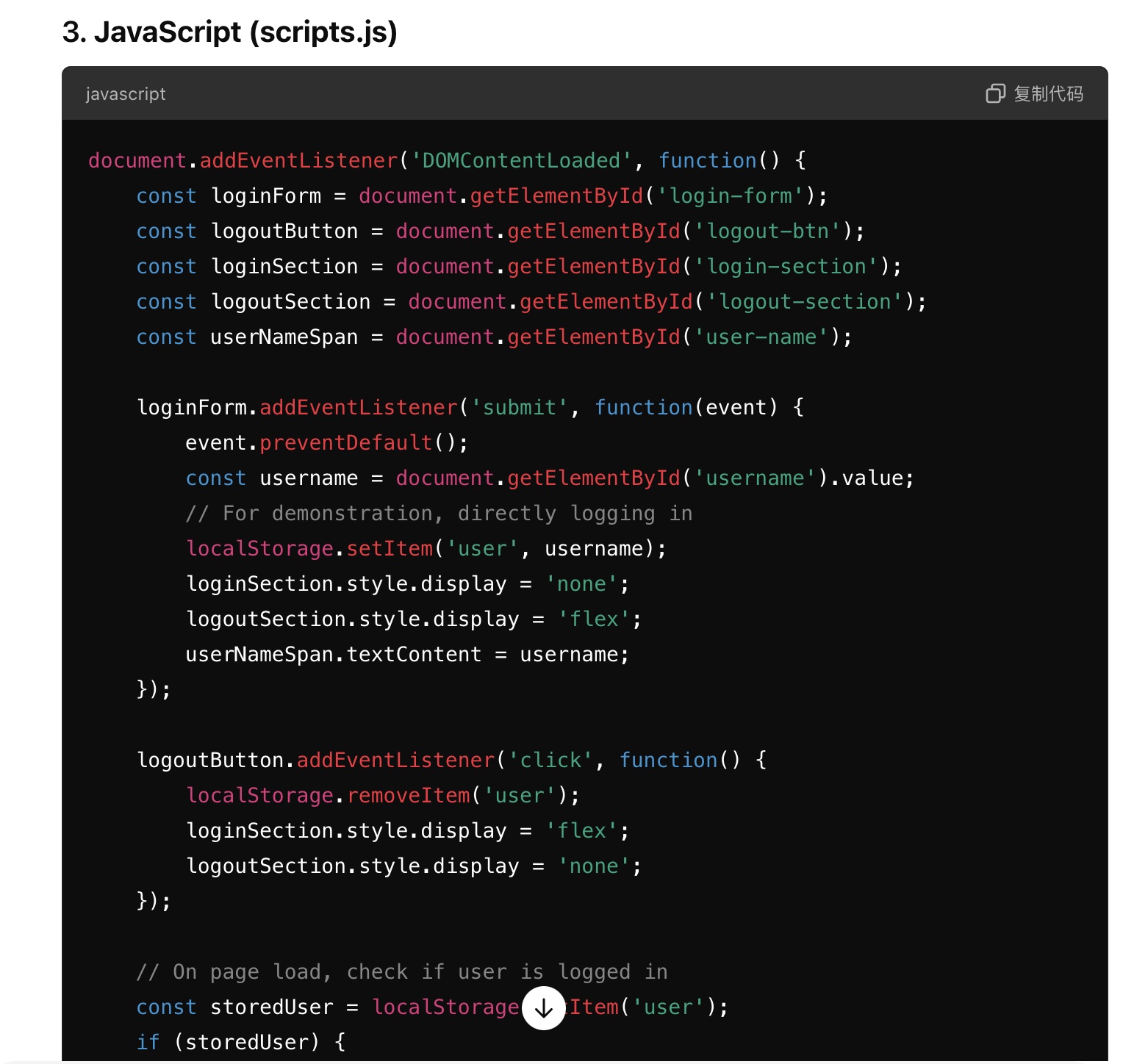

Prompt: Write front end code of the login and logout pages for H5 mobile application usage. Split the code in separate files for css, html, and js.

I used the OpenAI o1-preview model to implement the front-end code for the login and logout functions of an H5 mobile application, with the CSS, HTML, and JS split into separate files. The model's response to this front-end code generation task is very helpful. I copied the code into a separate folder and tried it myself; the resulting pages are shown in the attached images. It works to some extent, except that the CSS file is a little strange. Along with the code, the o1 model gives these explanations:

- index.html: Contains the structure of the login and logout pages.
- styles.css: Provides the styling for the pages to make them mobile-friendly.
- scripts.js: Handles the login and logout functionality. It uses localStorage to persist the logged-in state.


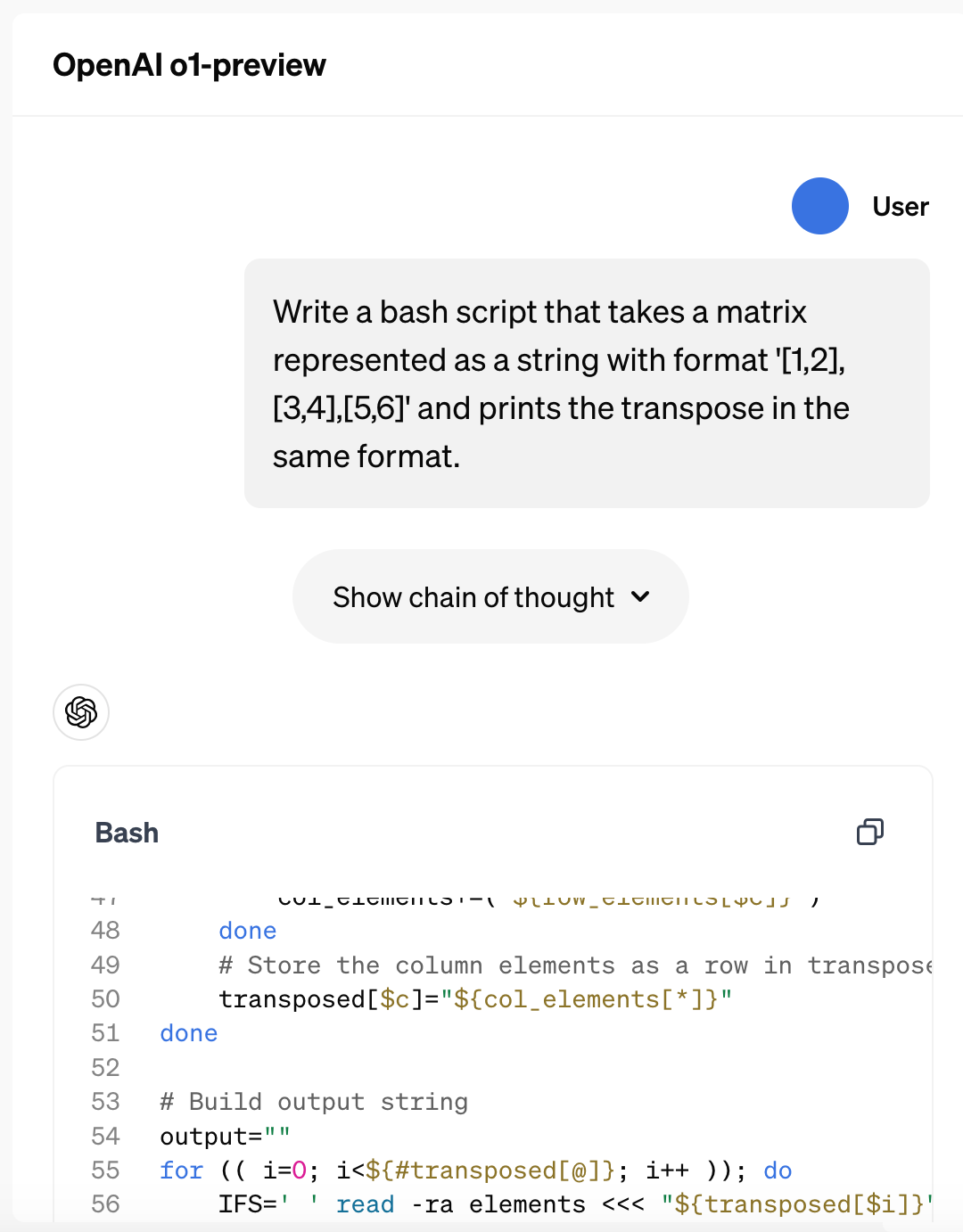

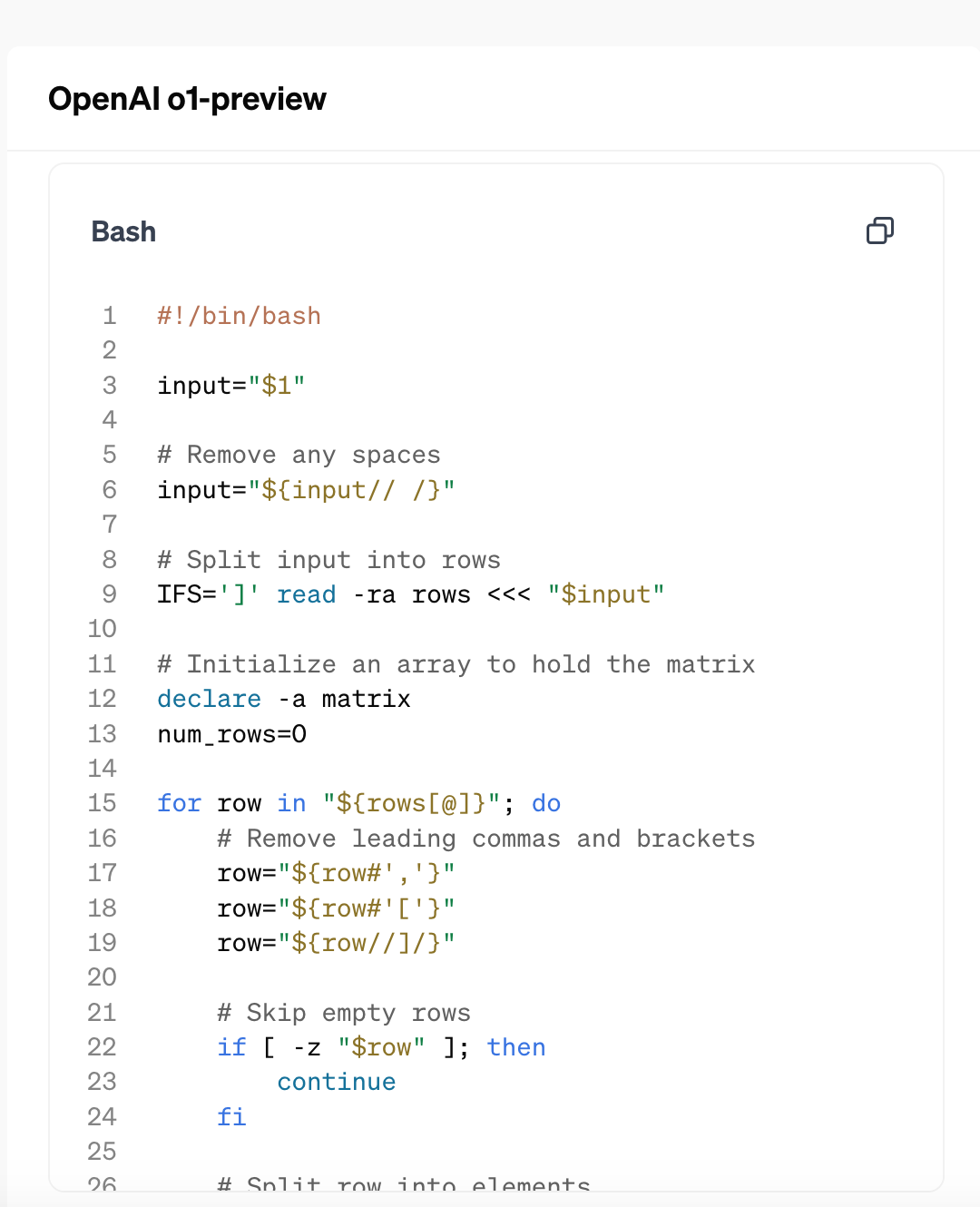

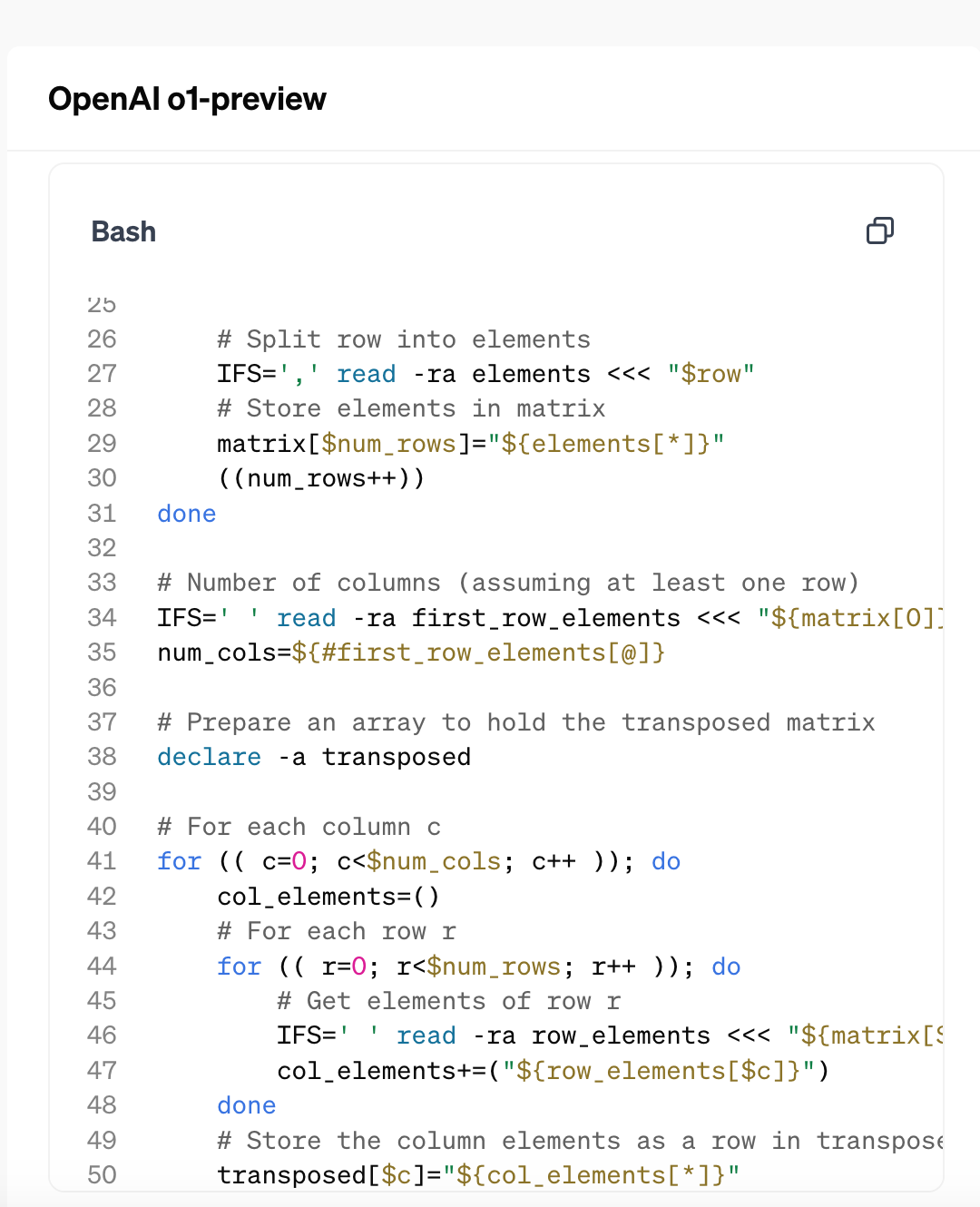

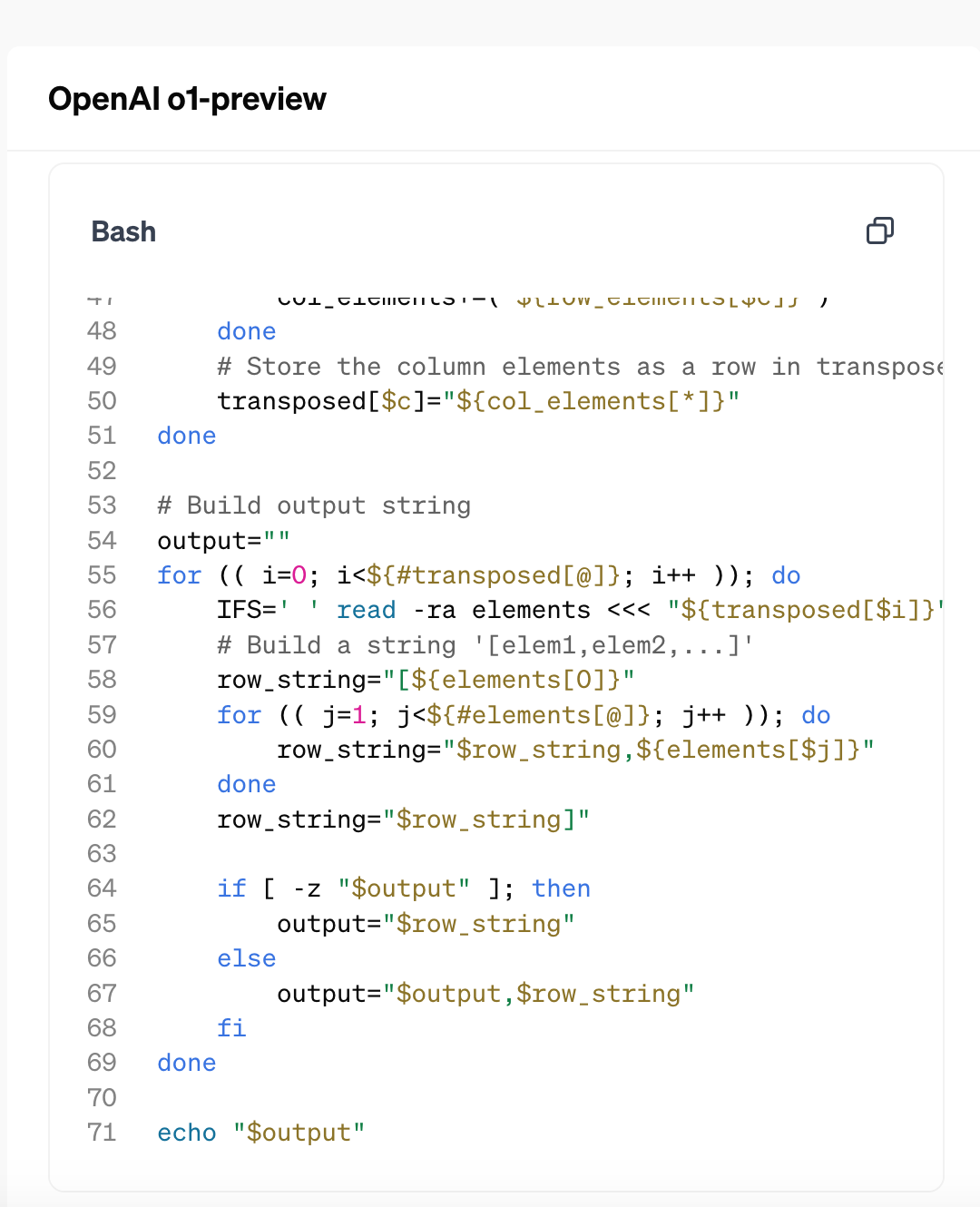

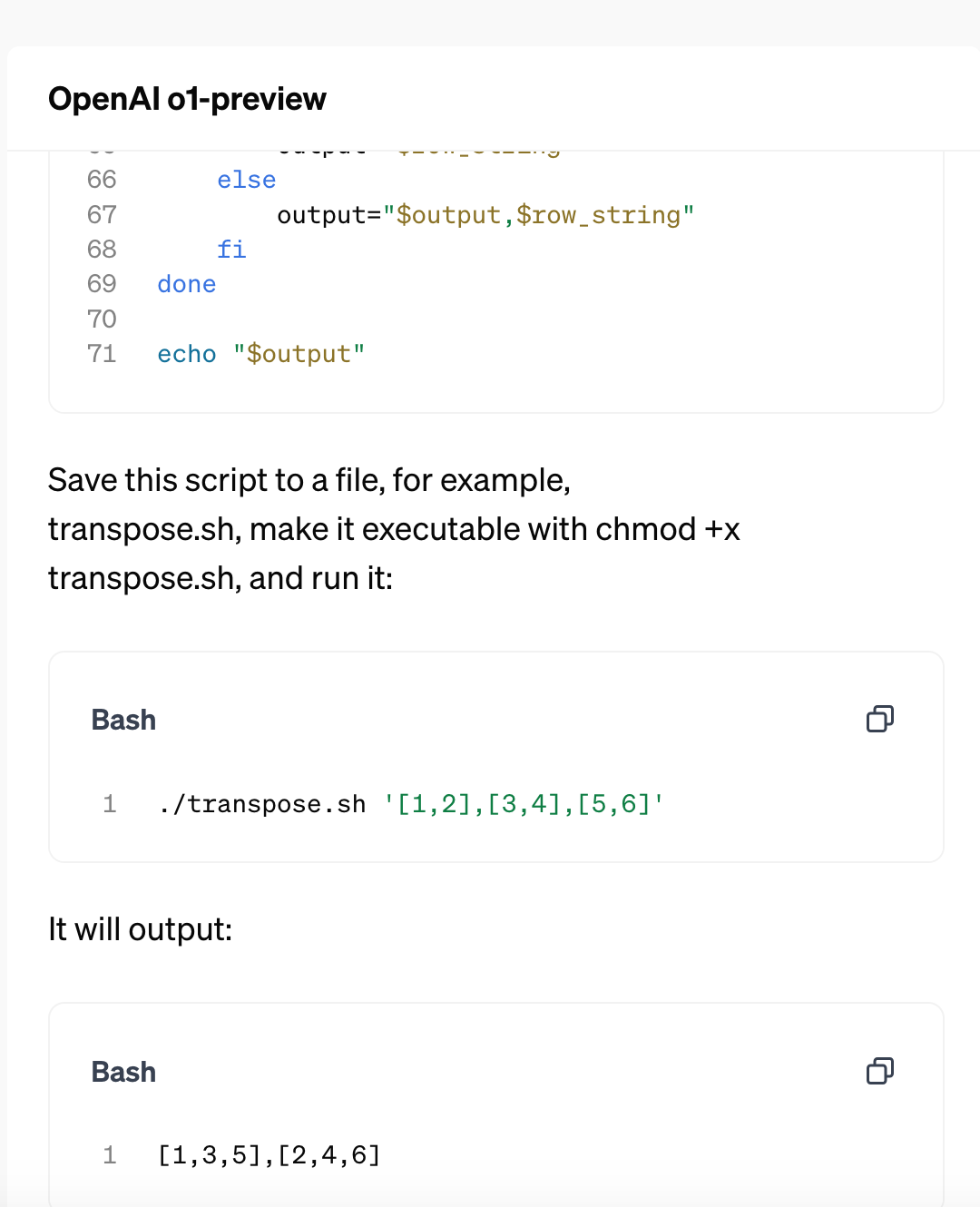

Prompt: Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format.

This is a review of OpenAI o1's coding ability, based on OpenAI's post on reasoning with LLMs. On the official website, the prompt given to OpenAI o1 asks for a bash script that "takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format." Comparing the results of o1 with GPT-4o, the final o1 script is much longer: 70 lines, versus only 31 lines for GPT-4o. The key difference is in how each script handles the "Build output string" step.

Source: https://openai.com/index/learning-to-reason-with-llms/
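For checking the output of either bash script, a short reference implementation can help. The sketch below is in Python rather than bash and is not taken from the o1 or GPT-4o answers; the function name `transpose_matrix_string` is hypothetical, and the code assumes every row has the same number of entries.

```python
import re


def transpose_matrix_string(text):
    """Transpose a matrix written as '[1,2],[3,4],[5,6]'."""
    # Pull out the contents of each bracketed row, then split into cells.
    rows = [
        [cell.strip() for cell in row.split(",")]
        for row in re.findall(r"\[([^\]]*)\]", text)
    ]
    if len({len(row) for row in rows}) > 1:
        raise ValueError("all rows must have the same length")
    # zip(*rows) yields the columns of the original matrix.
    columns = zip(*rows)
    # Build the output string in the same bracketed format.
    return ",".join("[" + ",".join(column) + "]" for column in columns)


if __name__ == "__main__":
    print(transpose_matrix_string("[1,2],[3,4],[5,6]"))  # [1,3,5],[2,4,6]
```

Running a bash candidate and this function on the same input and comparing the two strings is a quick way to confirm that a longer or shorter script still produces the expected transpose.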
