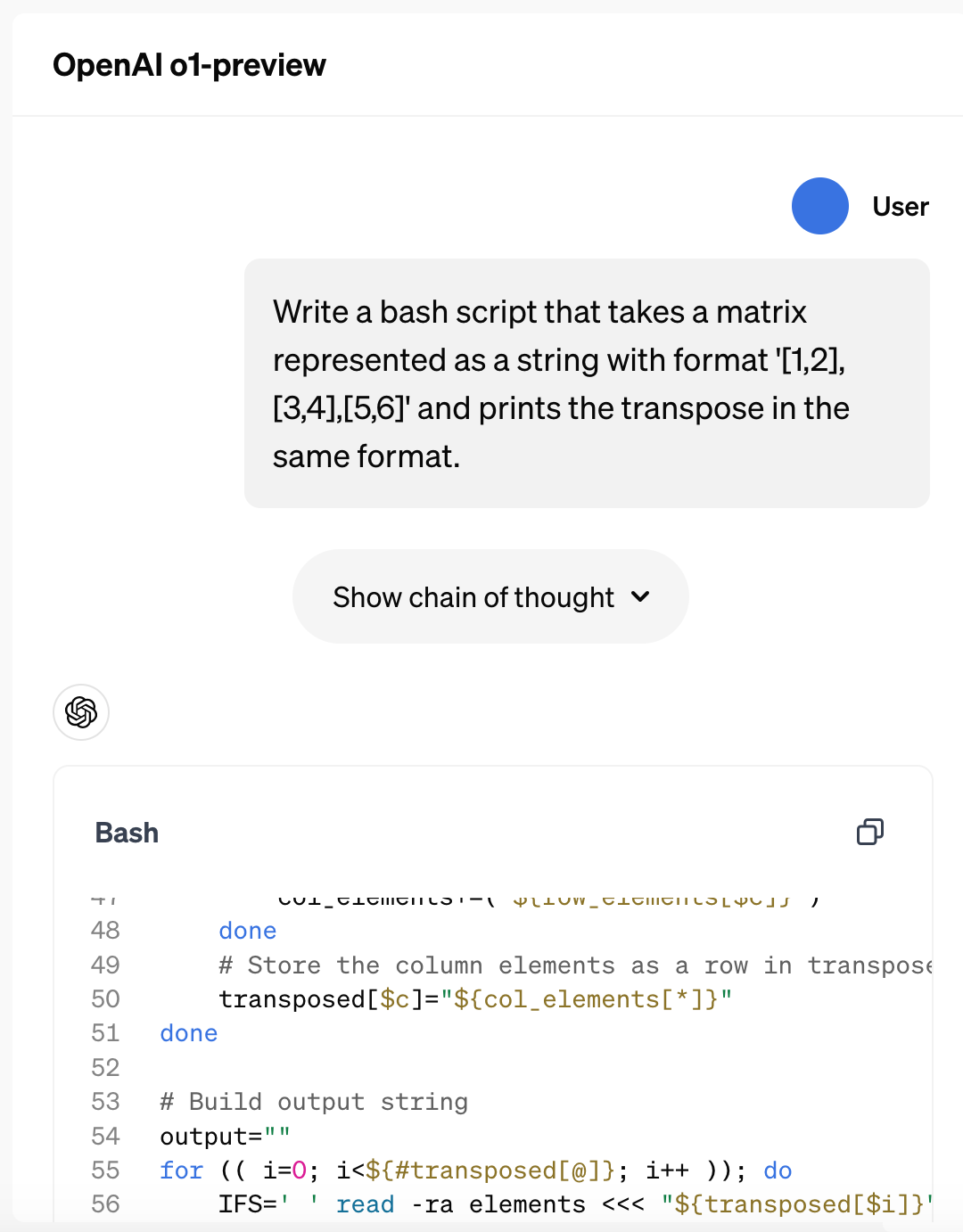

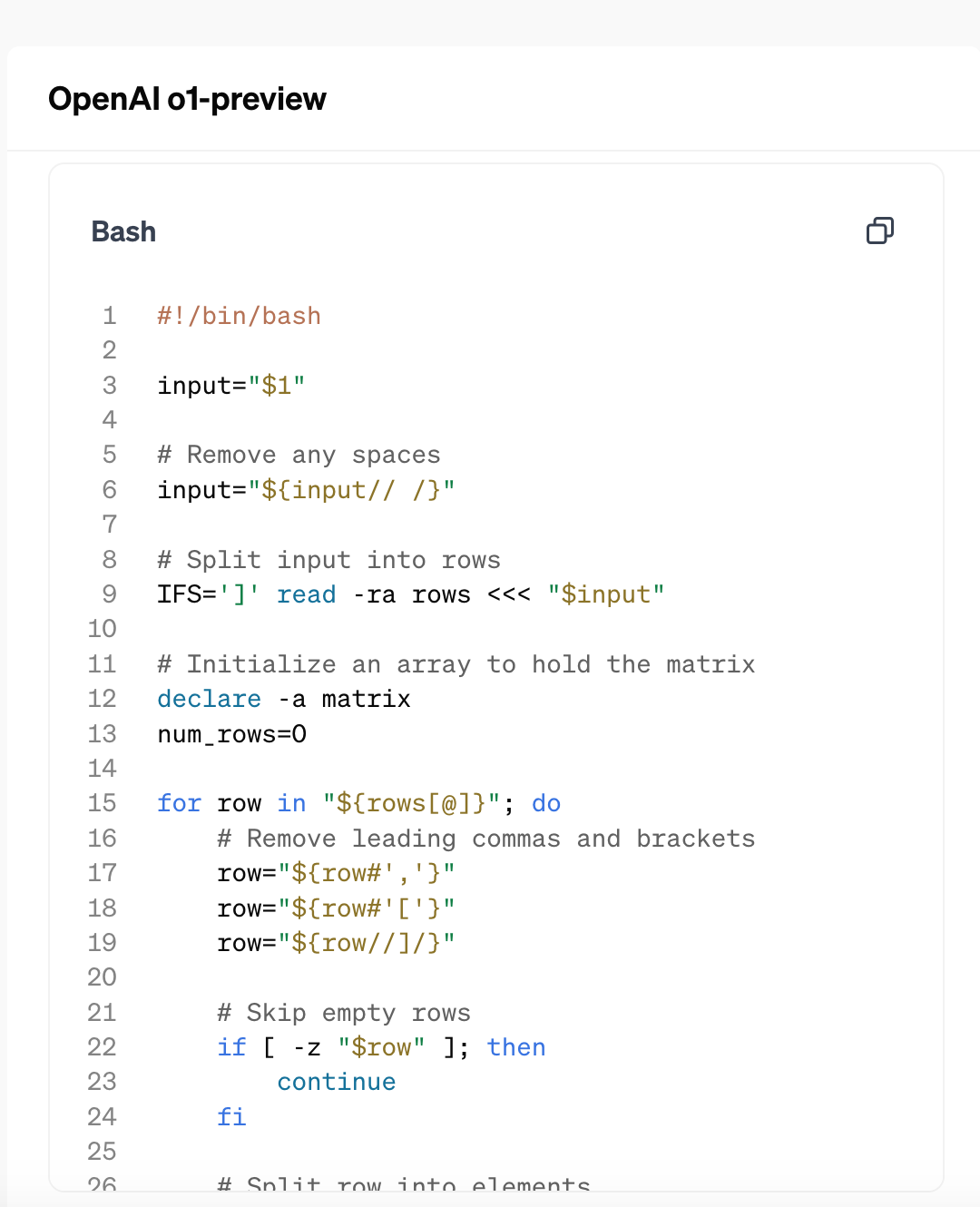

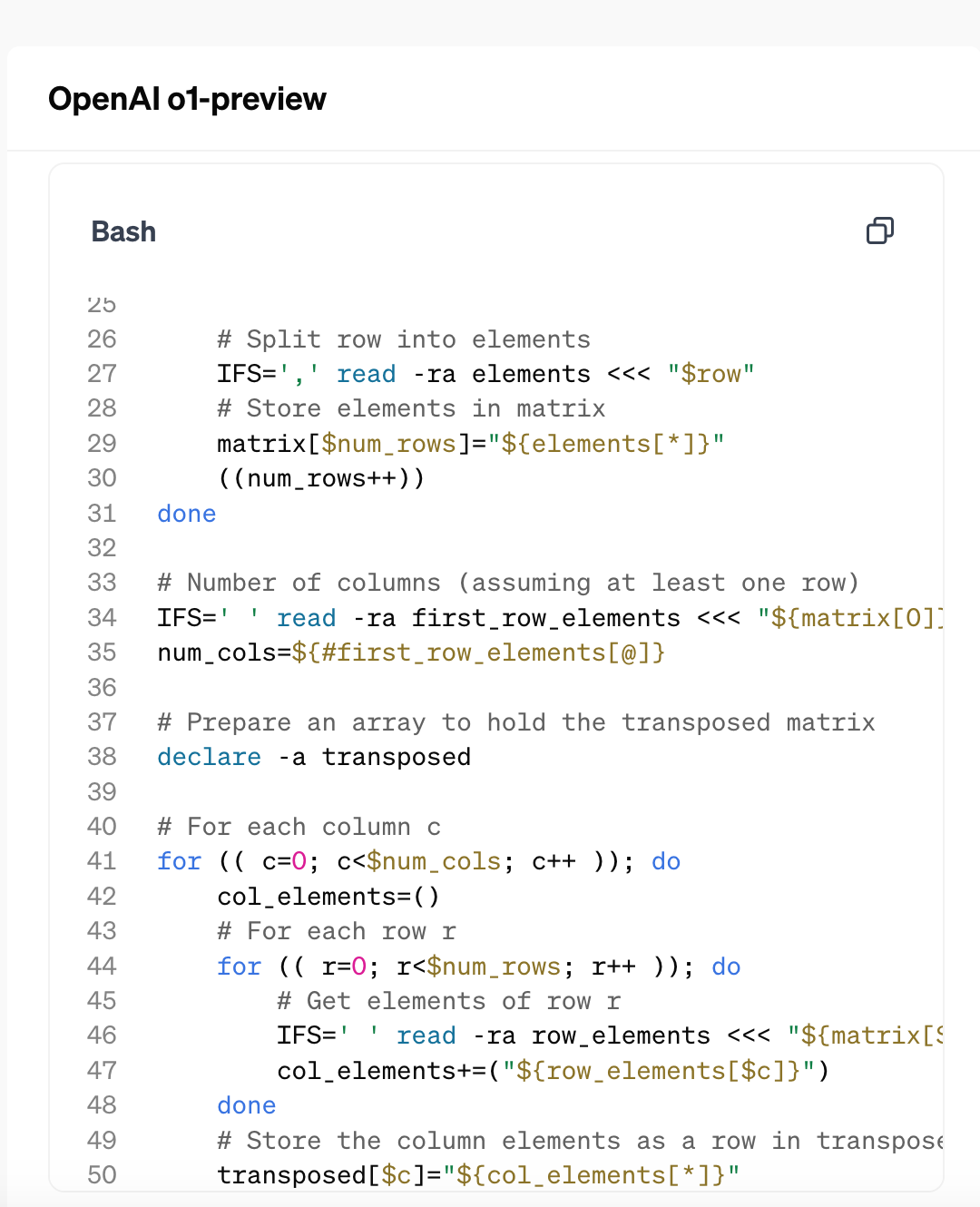

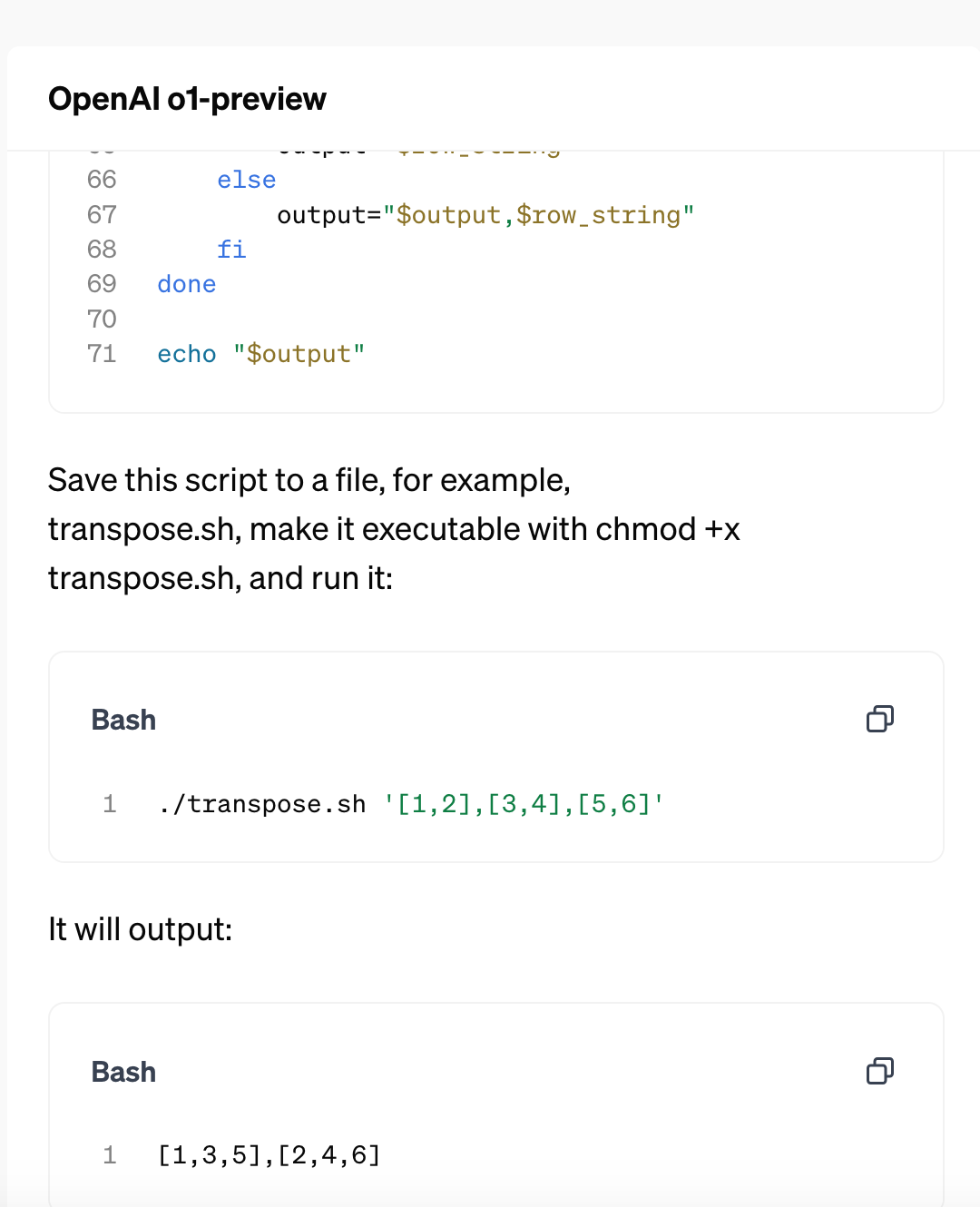

OpenAI just released o1, a new series of reasoning models designed to solve hard problems in science, coding, and math. According to OpenAI, the o1 model performs similarly to PhD students on challenging benchmark tasks in physics, chemistry, and biology, and it also excels in math and coding. On a qualifying exam for the International Mathematics Olympiad (IMO), GPT-4o correctly solved only 13% of problems, while the reasoning model scored 83%. Its coding ability was evaluated in contests, where it reached the 89th percentile in Codeforces competitions.

Source: https://openai.com/index/introducing-openai-o1-preview/